Issues in the relationship between stats and the beautiful game, as observed by a player who has been quantified by them

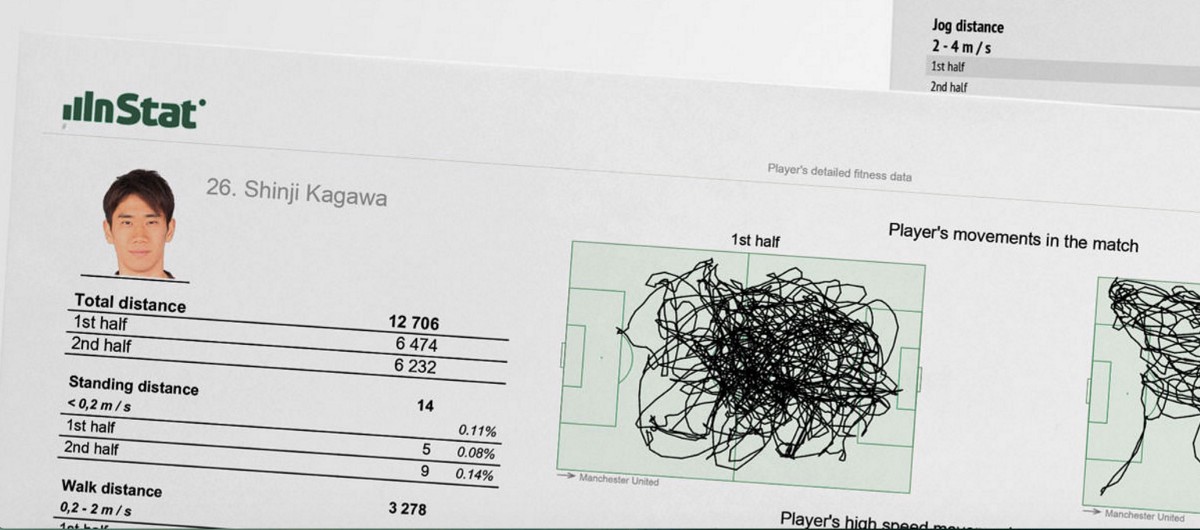

The rise of technology has brought about a change in the way we evaluate our sports. Much like the baseball book and film Moneyball, teams and scouts in all sports are shifting toward a more statistical and objective approach to assessing players. The advent of technologies like inStat Scout and Audi Player Index (API) brings statistical analysis to the forefront of player valuation. Software programs like inStat provide “all-in-one” analysis, stats and video breakdown for teams to use in reviewing their previous matches. Coaching staffs continually look for more statistical evidence when grading players, and new technologies are now making that possible.

Let’s be clear: Soccer is nothing like baseball. In baseball, it’s simple to quantify almost every aspect of a game, so much so that it can be scored play-by-play with a statistical notepad. The lack of fluidity in baseball creates a breeding ground for objectivity. The game boils down to the pitcher vs. the batter and continually resets until three players are out. Soccer, on the other hand, is 90 minutes (plus an approximated amount of stoppage time) of near-constant movement, adaptation and tactics. It’s a game that requires a proactive approach coupled with a malleable, reactive element. The idiosyncrasies of the beautiful game are difficult to quantify in numbers, and treating calculated, arbitrary figures as the basis for holistic player evaluation is a flawed exercise.

Players are a part of a larger organism while on the field, so using the new technologies to quantify the play of individuals may not accurately depict the entire picture. Simultaneously running thousands of regressions to weed out the insignificant data could help, but that’s not enough. A series of algorithms would need to be introduced that connect and track all 22 players throughout the entire match in relation to each other and then give a statistical analysis on each player individually. This is essentially the conventional job of a scout. Even if these algorithms existed, the system would nevertheless be flawed. The coach’s tactical strategy and philosophy on the day might require individual players to play a “role” inside the system and thus require constant modification of the algorithms.

For example, some players are often asked to play different positions or a distinct role in order to counteract what the opponent is trying to do. If Jesse Marsch, head coach of the New York Red Bulls and an excellent tactician, asks Felipe to track Sebastian Giovinco around the park for 90 minutes, it’s all but guaranteed that Felipe’s stat index is going to plummet that game. If Felipe does his job, shuts down Giovinco, and NYRB win the game, domestic and foreign scouts looking at the numbers alone might say that Felipe was one of the worst players on the pitch. The data means nothing without the surrounding context. For this reason, objective conclusions about the game (and its players) should be pursued for practical purposes, but taken for no more than their face value.

Another shining example came in a recent match: Darlington Nagbe vs. Minnesota United in MLS week one. API rated Nagbe as the worst (in terms of numbers) player on the field in that half. Any semi-educated observer could see that simply was not the case. His runs off the ball, opening of space for teammates and tactical vision are not quantified in these numbers. The statistical analyses in these systems are attempting to calibrate an abstract game. Yes, the algorithm may be able to identify the top 10 performers fairly accurately, but after that the data becomes unreliable.

My personal experience with inStat revealed these flaws to be bigger than I thought. It felt like my self-evaluation of the game was inversely proportional to my inStat score. When I felt I played well and the team won, my score was disappointing, and vice versa. I went back to look through the inStat video replay system and noticed that a good chunk of the clips were not of me, but of a teammate who happened to look similar to me. On top of that, players who break up passes and block crosses often get docked points for an “unconnected pass,” which, in context, may have been a fluky deflection or a header won off a goal kick. The main problem isn’t technology attempting to quantify useful information about the game, but rather coaches, teams and fans giving weight to these statistics and believing that they reflect the true value of a player.

Without question, statistical valuations have their rightful place in sports. However, the troubling problem lies in worshipping the data simply because it was collected. Numbers attempt to specify, classify and calculate the sport but they can’t explain the essence of what it is that we are witnessing. The fact that Barcelona’s win over PSG this past week made enough noise to register as a small earthquake on the Richter scale is remarkable and delightfully quantifiable. The direct cause of that earthquake was the immeasurable power of soccer expressed through the vocal cords and reverberated through the masses.

The examples are endless but the point remains: contextual subjectivity is the preferred method in establishing a player’s true value. This is why coaches prefer some players to others and why choosing the appropriate players to fit your team’s tactical philosophy matters more than computed appraisals.

I do concede that these analytics, combined with additional factors such as salary, cap space and whether a player occupies an international or domestic roster spot, can be useful in providing a more accurate valuation of a player. However, many (if not all) of the statistical programs do not factor these variables into their results. In fact, some of the technologies are simply GPS devices tracking the distance covered and heart rate of the athlete. Tracking distance covered is about gauging the individual’s endurance relative to others in his position and not about judging laziness or how hard a player is trying. Athletic trainers and fitness coaches use this data to see which players are working close to their max load and risking injury throughout the week, and which players need to pick up their training intensity in relation to their baseline numbers.
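The baseline comparison described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any GPS vendor or club actually does it: the `WeeklyLoad` fields, the `classify_load` function, the 90 percent and 80 percent thresholds and the sample numbers are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class WeeklyLoad:
    """One player's training week. Every field here is a hypothetical illustration."""
    player: str
    baseline_km: float  # the player's own typical weekly distance
    max_km: float       # the load the staff treats as this player's ceiling
    actual_km: float    # distance recorded by the GPS unit this week


def classify_load(week: WeeklyLoad, near_max: float = 0.9, below_base: float = 0.8) -> str:
    """Label a week against the player's own numbers, not against teammates.

    The thresholds are arbitrary placeholders, not sports-science guidance.
    """
    if week.actual_km >= near_max * week.max_km:
        return "near max load"
    if week.actual_km < below_base * week.baseline_km:
        return "below baseline"
    return "within normal range"


if __name__ == "__main__":
    # Invented sample data for three players with identical baselines.
    squad = [
        WeeklyLoad("Player A", baseline_km=40.0, max_km=50.0, actual_km=47.0),
        WeeklyLoad("Player B", baseline_km=40.0, max_km=50.0, actual_km=30.0),
        WeeklyLoad("Player C", baseline_km=40.0, max_km=50.0, actual_km=41.0),
    ]
    for week in squad:
        print(f"{week.player}: {classify_load(week)}")
```

Notice what the output is: a flag for the fitness staff about workload and injury risk. Nothing in it says whether Player A had a good week on the ball.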

In this respect, objective statistics are useful in evaluating and assessing the risk of injury and workload throughout the week. They do not, however, amount to a precise metric of a player’s value. A 16-year-old high-school winger could run over 10 miles in a game, but that doesn’t automatically qualify him to be considered by top-flight clubs. Yes, the distance covered by a particular player in a match is relevant to fitness standards set forth by the coaching staff, but without the subjective context of the game, the numbers mean nothing. A tactically sharp player may not need to run as much as others because his anticipation, vision and knowledge of the game allow him to be in the right spot on the pitch. These are the traits that separate the good players from the great.

Proper, tangible measurements of a player’s ‘soft’ skills are currently nonexistent. Passion, resiliency, leadership, communication and awareness are all distinct traits of some of the best players in the world and not merely cliches used to sell sports drinks. Looking at a spreadsheet or heat map on Twitter can’t adequately represent the center midfielder who influenced the game by shutting down the opposition’s best player while simultaneously inspiring his team to victory. The numbers also won’t show what goes on inside the locker room, what type of character the individual player has, how he responds to a mistake or poor performance the previous week, or the leadership he brings to the table. These are concepts that must be observed using the sensitivity of the human eye, not a camera lens. Soccer is a particular experience that can’t be fully appreciated through computation alone.